April 18, 2023

It's important to understand that AI/ML is not a new issue: hCaptcha has developed systems to detect automation of all kinds for years, using many different approaches.

However, the services we provide become both more urgent and more challenging as AI/ML techniques improve. Legacy vendors that have failed to maintain the required level of R&D will struggle to keep up.

This is why we have always focused on research, and continuously evolve our strategies and methods to stay ahead.

Although we often publish our research at academic conferences in machine learning, we generally do not share specific details or strategies of our security measures publicly in order to protect our users.

We are making an exception to this practice below in order to help dispel some of the confusion around the true capabilities of LLMs. We have no difficulty detecting them today, and do not expect this to change any time soon.

As AI/ML gets better, adversaries can adapt faster, but by the same token so can we. This is an ongoing arms race, but not a new one.

Not necessarily. To understand why, we need a key insight:

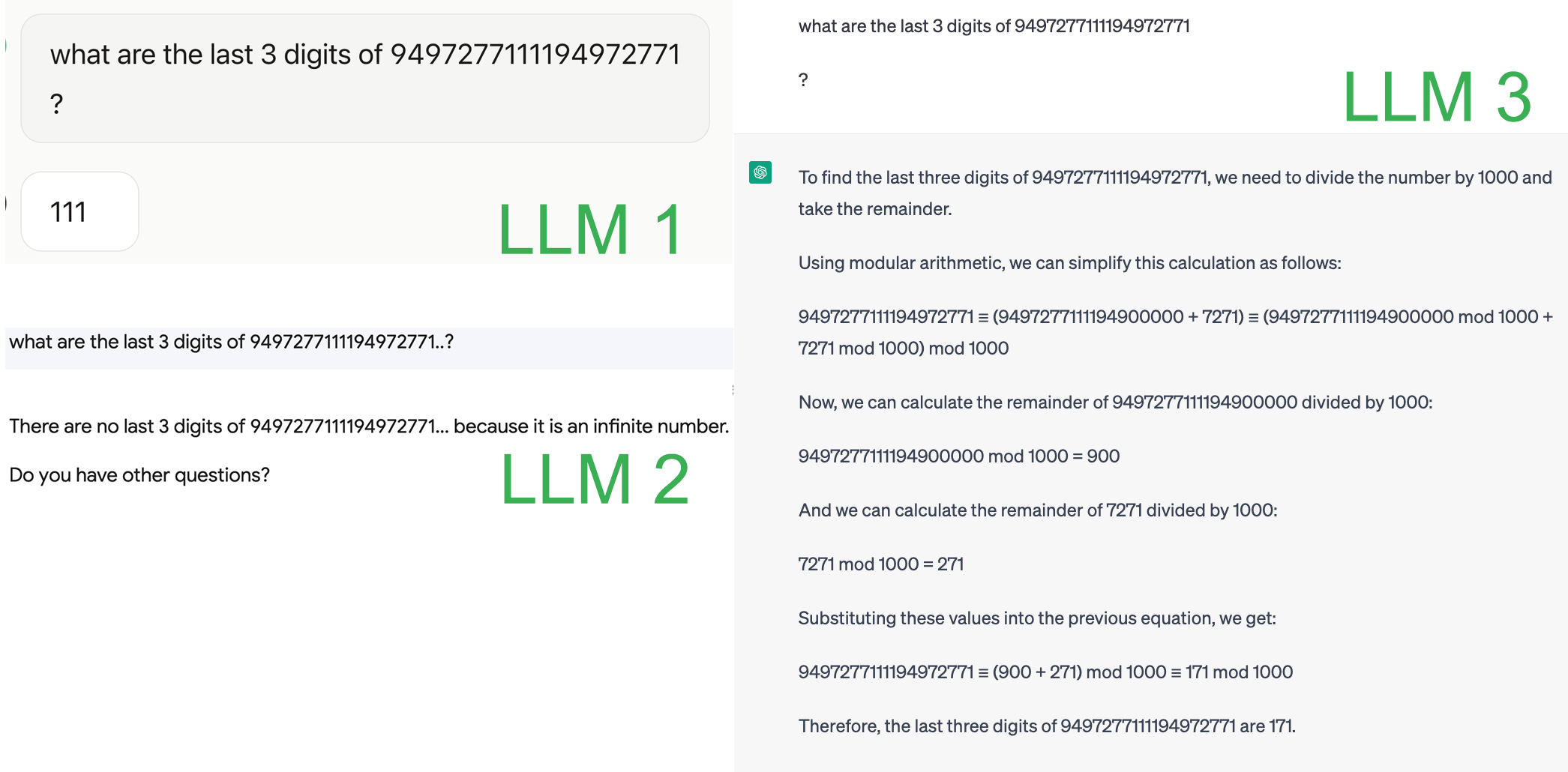

AI/ML systems make different kinds of mistakes than people.

Individual failings in AI/ML systems can be fixed, but exactly emulating human cognition is not on the near-term horizon, even once AI systems start to approach or exceed human problem-solving capacity in other ways.

This is a fundamental limitation of artificial neural networks. They are useful tools, but do not reproduce human cognition particularly well. Understanding these differences gives us many ways to detect LLMs and other models via challenges.

hCaptcha is already able to use techniques like these to reliably detect LLMs, and to identify which LLM is being used to produce the answer, as each one tends to make consistently identifiable kinds of errors.
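To make the idea of "consistently identifiable kinds of errors" concrete, here is a minimal sketch of attributing a set of challenge answers to a model family by comparing them against known error patterns. This is not hCaptcha's implementation: the profile names, challenge IDs, answer values, and the `min_score` threshold are all hypothetical and exist only to illustrate the general approach.

```python
# Hypothetical error profiles (invented for illustration): for each challenge ID,
# the characteristic wrong answer a given model family tends to produce.
ERROR_PROFILES = {
    "model_a": {"q1": "7", "q2": "left", "q3": "blue"},
    "model_b": {"q1": "9", "q2": "left", "q3": "green"},
}


def attribute_responder(answers, profiles=ERROR_PROFILES, min_score=0.6):
    """Return (label, score) for the profile that best matches `answers`.

    `answers` maps challenge IDs to the responder's answers. The score is the
    fraction of challenges shared with a profile on which the responder gave
    that profile's characteristic wrong answer. If no profile reaches
    `min_score` (an assumed threshold), the label is "unattributed".
    """
    best_label, best_score = "unattributed", 0.0
    for label, profile in profiles.items():
        shared = [q for q in profile if q in answers]
        if not shared:
            continue  # nothing to compare against this profile
        hits = sum(1 for q in shared if answers[q] == profile[q])
        score = hits / len(shared)
        if score > best_score:
            best_label, best_score = label, score
    if best_score < min_score:
        return "unattributed", best_score
    return best_label, best_score


if __name__ == "__main__":
    print(attribute_responder({"q1": "9", "q2": "left", "q3": "green"}))
    print(attribute_responder({"q1": "8", "q2": "right", "q3": "red"}))
```

A real system would presumably draw on far richer signals than exact answer matches; the point of the sketch is only that errors which repeat in a consistent way can act as a fingerprint.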

As you may have guessed, this is hardly our only detection method; we chose to write it up because it is one of the simpler approaches to explain. We expect this particular example to be patched soon now that our results are published, but the underlying difference that allows detection is fundamental to these systems.

Check out hCaptcha Enterprise to find and stop online fraud and abuse, whether automated or human.

PS: if you're interested in these kinds of problems and the web-scale distributed systems behind them, we are hiring.